Data Patterns

Shared database

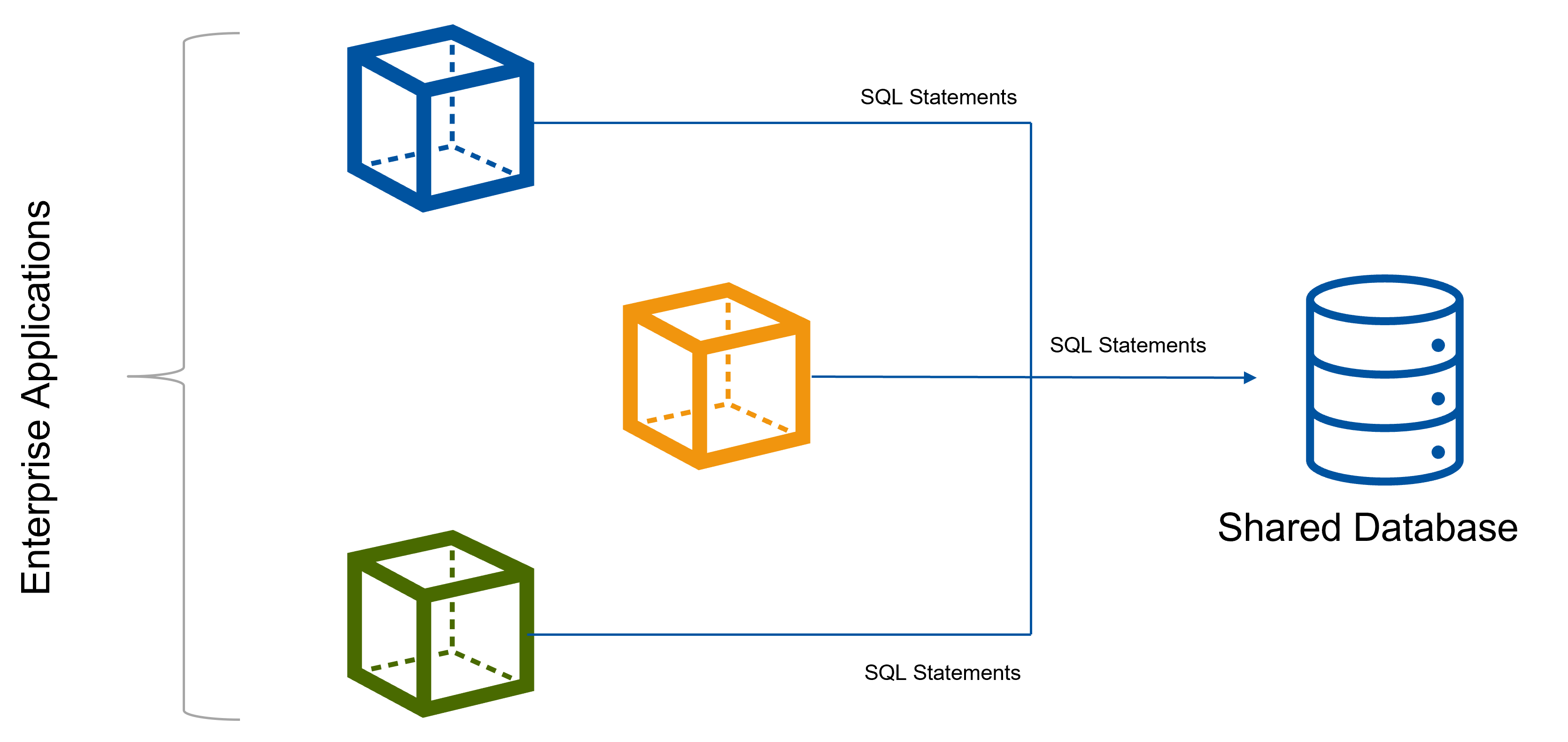

In legacy systems, it is common practice for multiple applications to share a single database.

The pros of this approach are:

- Simplified data management

- Cost savings on the database (licensing, servers …)

- Centralized database administration

Challenge #1

All applications are database-schema aware, meaning their code depends directly on table names, column names, etc. Schema changes therefore need to be managed carefully, and they come with:

- High risk

- High cost

- Loss of agility

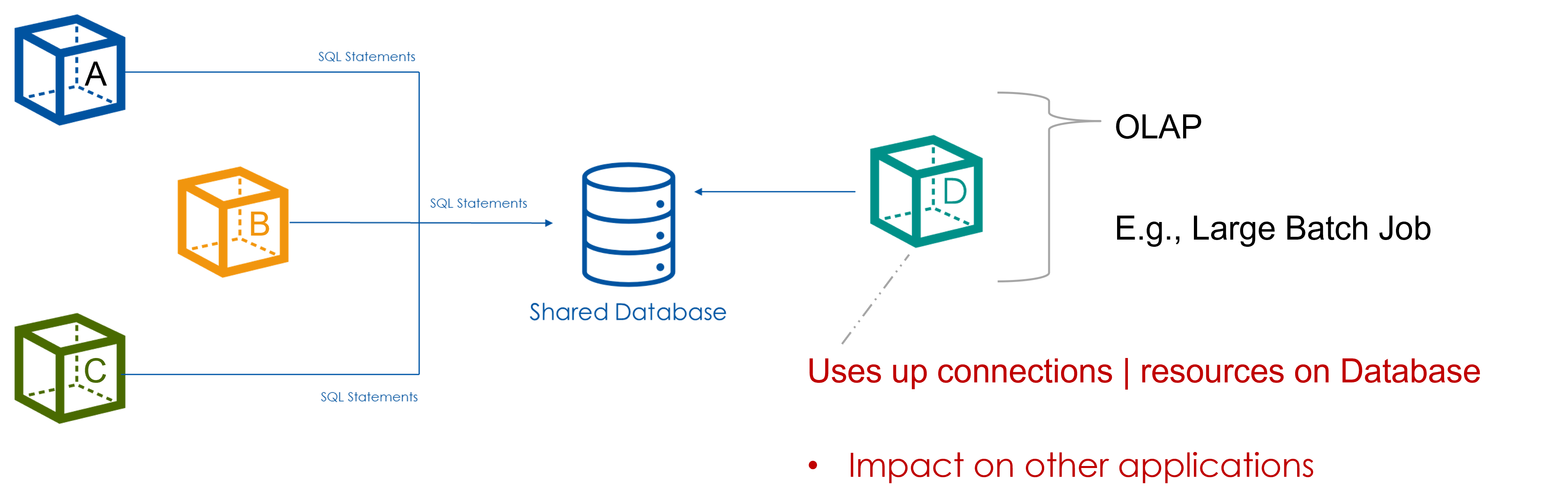
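To make the coupling concrete, here is a minimal sketch using Python's built-in `sqlite3` module. It assumes two hypothetical applications, A and B, that both query a hypothetical `customer` table in the same database; all table, column, and function names are illustrative only. Renaming a single column breaks both applications at once.

```python
import sqlite3

# One in-memory SQLite database stands in for the shared database.
shared_db = sqlite3.connect(":memory:")
shared_db.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, email TEXT)")
shared_db.execute("INSERT INTO customer (id, email) VALUES (1, 'ann@example.com')")


# Hypothetical application A: hard-codes the table and column names.
def app_a_get_email(conn, customer_id):
    row = conn.execute("SELECT email FROM customer WHERE id = ?", (customer_id,)).fetchone()
    return row[0] if row else None


# Hypothetical application B: also hard-codes the same column.
def app_b_count_with_email(conn):
    return conn.execute("SELECT COUNT(*) FROM customer WHERE email IS NOT NULL").fetchone()[0]


def run_apps(conn):
    """Run both apps and record their result, or the error that broke them."""
    results = {}
    for name, call in [("A", lambda: app_a_get_email(conn, 1)),
                       ("B", lambda: app_b_count_with_email(conn))]:
        try:
            results[name] = call()
        except sqlite3.OperationalError as exc:
            results[name] = f"broken: {exc}"
    return results


before = run_apps(shared_db)
print(before)

# One team renames 'email' to 'email_address'. Rebuilding the table
# (instead of ALTER TABLE ... RENAME COLUMN) also works on older SQLite versions.
shared_db.executescript("""
    CREATE TABLE customer_new (id INTEGER PRIMARY KEY, email_address TEXT);
    INSERT INTO customer_new (id, email_address) SELECT id, email FROM customer;
    DROP TABLE customer;
    ALTER TABLE customer_new RENAME TO customer;
""")

after = run_apps(shared_db)
print(after)  # both apps now report an OperationalError about the missing column
```

Neither application was changed, yet both fail: the schema itself is the contract between them.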

Challenge #2

One application can degrade the performance of all the others. In this illustration, application D runs a large reporting batch job that degrades the experience of users of the OLTP applications A, B and C.

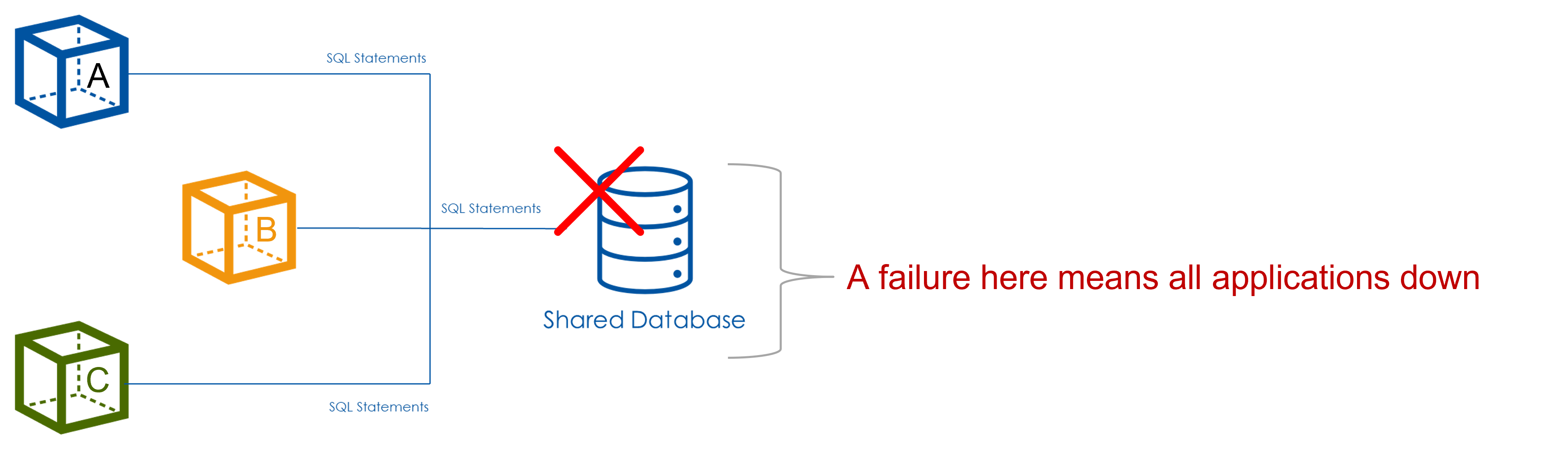
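This noisy-neighbour effect can be sketched as a toy model. Purely for illustration, it assumes the shared database accepts a fixed pool of four connections, modelled with `threading.BoundedSemaphore`. The hypothetical reporting job of application D grabs every connection, so short OLTP requests from A, B and C time out until the job gives them back. Real contention also involves CPU, I/O and locks; the connection pool is simply the easiest shared resource to model.

```python
import threading

# Assumption: the shared database accepts at most 4 concurrent connections.
POOL_SIZE = 4
connection_pool = threading.BoundedSemaphore(POOL_SIZE)


def reporting_batch_job(pool, connections_wanted):
    """Hypothetical app D: grab as many connections as possible for a big report."""
    held = 0
    for _ in range(connections_wanted):
        if pool.acquire(blocking=False):  # non-blocking: take whatever is free
            held += 1
    return held


def oltp_request(pool, app_name, timeout_s=0.05):
    """Hypothetical apps A, B, C: a short transaction needing one connection."""
    if not pool.acquire(timeout=timeout_s):
        return f"{app_name}: timed out waiting for a connection"
    try:
        return f"{app_name}: ok"  # the real query would run here
    finally:
        pool.release()


held = reporting_batch_job(connection_pool, connections_wanted=10)
during_report = [oltp_request(connection_pool, app) for app in ("A", "B", "C")]

# The report finishes and returns its connections to the pool.
for _ in range(held):
    connection_pool.release()
after_report = [oltp_request(connection_pool, app) for app in ("A", "B", "C")]

print(during_report)
print(after_report)
```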

Challenge #3

The shared database is a single point of failure: if the database suffers an outage, every application that depends on it becomes unavailable.
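A rough way to quantify this, under the simplifying assumption that failures are independent: a request that needs both an application and the database succeeds only if both are up, so its availability is the product of the two. The availability figures below are hypothetical. The second function shows the other side of the problem: even perfectly healthy applications are down whenever the shared database is.

```python
# Hypothetical availability figures (fraction of time the component is up).
APP_AVAILABILITY = 0.9995
DB_AVAILABILITY = 0.999
MINUTES_PER_YEAR = 365 * 24 * 60


def end_to_end_availability(app, db):
    """Serial dependency, assuming independent failures: both must be up."""
    return app * db


def apps_up(app_health, db_is_up):
    """An app serves users only if it is healthy AND the shared database is up."""
    return {name: healthy and db_is_up for name, healthy in app_health.items()}


combined = end_to_end_availability(APP_AVAILABILITY, DB_AVAILABILITY)
db_downtime_min = (1 - DB_AVAILABILITY) * MINUTES_PER_YEAR
print(f"per-app availability: {combined:.5%}")
print(f"shared DB downtime: {db_downtime_min:.1f} min/year, hitting every app at once")

# All four apps are healthy, but the shared database is down.
status = apps_up({"A": True, "B": True, "C": True, "D": True}, db_is_up=False)
print(status)
```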

Challenge #4

Capacity planning is needed to ensure that enough capacity is available for growth across all applications.

Over-provisioning is a common way to reduce the risk of an outage. This is a waste of $$, as much of the excess capacity is never used :(
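A back-of-the-envelope capacity plan, with every number hypothetical: sum each application's peak demand (here measured in database connections), add the expected growth, then add a safety margin. Integer arithmetic keeps the figures exact.

```python
# Hypothetical peak demand per application, in database connections.
peak_connections = {"A": 400, "B": 300, "C": 200, "D": 100}
GROWTH_PCT = 20    # assumed growth over the planning horizon
HEADROOM_PCT = 50  # assumed safety margin to reduce the risk of an outage


def plan_capacity(peaks, growth_pct, headroom_pct):
    """Total peak, scaled up for growth, then scaled up again for headroom."""
    total_peak = sum(peaks.values())
    with_growth = total_peak * (100 + growth_pct) // 100
    return with_growth * (100 + headroom_pct) // 100


provisioned = plan_capacity(peak_connections, GROWTH_PCT, HEADROOM_PCT)
current_peak = sum(peak_connections.values())  # assumes all apps peak together today
idle = provisioned - current_peak
print(provisioned, idle, f"{idle * 100 // provisioned}% idle")  # 1800 800 44% idle
```

With these inputs the plan provisions 1800 connections, of which 800 (about 44%) sit idle even at today's combined peak.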

Challenge #5

Application teams are tied to a single type of database. Shared databases are usually relational, so applications cannot take advantage of other database engines that may be better suited to their needs.

Overcoming the challenges

In the early 2000s, designers started using Service-Oriented Architecture (SOA) to address some of these challenges.

Microservices architecture addresses all of these challenges with a pattern known as the Separate Database pattern.
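A minimal sketch of the idea, assuming two hypothetical services running in one process: `CustomerService` keeps its data in a private SQLite database, while `OrderService` keeps its data in a plain dictionary. In a real system the call between them would be a network API (for example REST or gRPC); the in-process method call is an assumption of this sketch. Because `OrderService` only calls `get_email`, the customer team can rename `email_address` or switch engines without touching order code, and each service is free to pick the store that suits it.

```python
import sqlite3


class CustomerService:
    """Hypothetical service that owns its data in a private relational store."""

    def __init__(self):
        self._db = sqlite3.connect(":memory:")  # no other service gets this connection
        self._db.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, email_address TEXT)")

    def add_customer(self, customer_id, email):
        self._db.execute(
            "INSERT INTO customer (id, email_address) VALUES (?, ?)", (customer_id, email)
        )

    def get_email(self, customer_id):
        """Public API: callers never see table or column names."""
        row = self._db.execute(
            "SELECT email_address FROM customer WHERE id = ?", (customer_id,)
        ).fetchone()
        return row[0] if row else None


class OrderService:
    """Hypothetical service that owns its data in a different store: a dict."""

    def __init__(self, customers):
        self._orders = {}            # order_id -> (customer_id, amount)
        self._customers = customers  # reached only through CustomerService's API

    def place_order(self, order_id, customer_id, amount):
        self._orders[order_id] = (customer_id, amount)

    def confirmation_recipient(self, order_id):
        customer_id, _ = self._orders[order_id]
        return self._customers.get_email(customer_id)


customers = CustomerService()
customers.add_customer(1, "ann@example.com")
orders = OrderService(customers)
orders.place_order(100, 1, 42.0)
print(orders.confirmation_recipient(100))  # ann@example.com
```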